Concept Overview

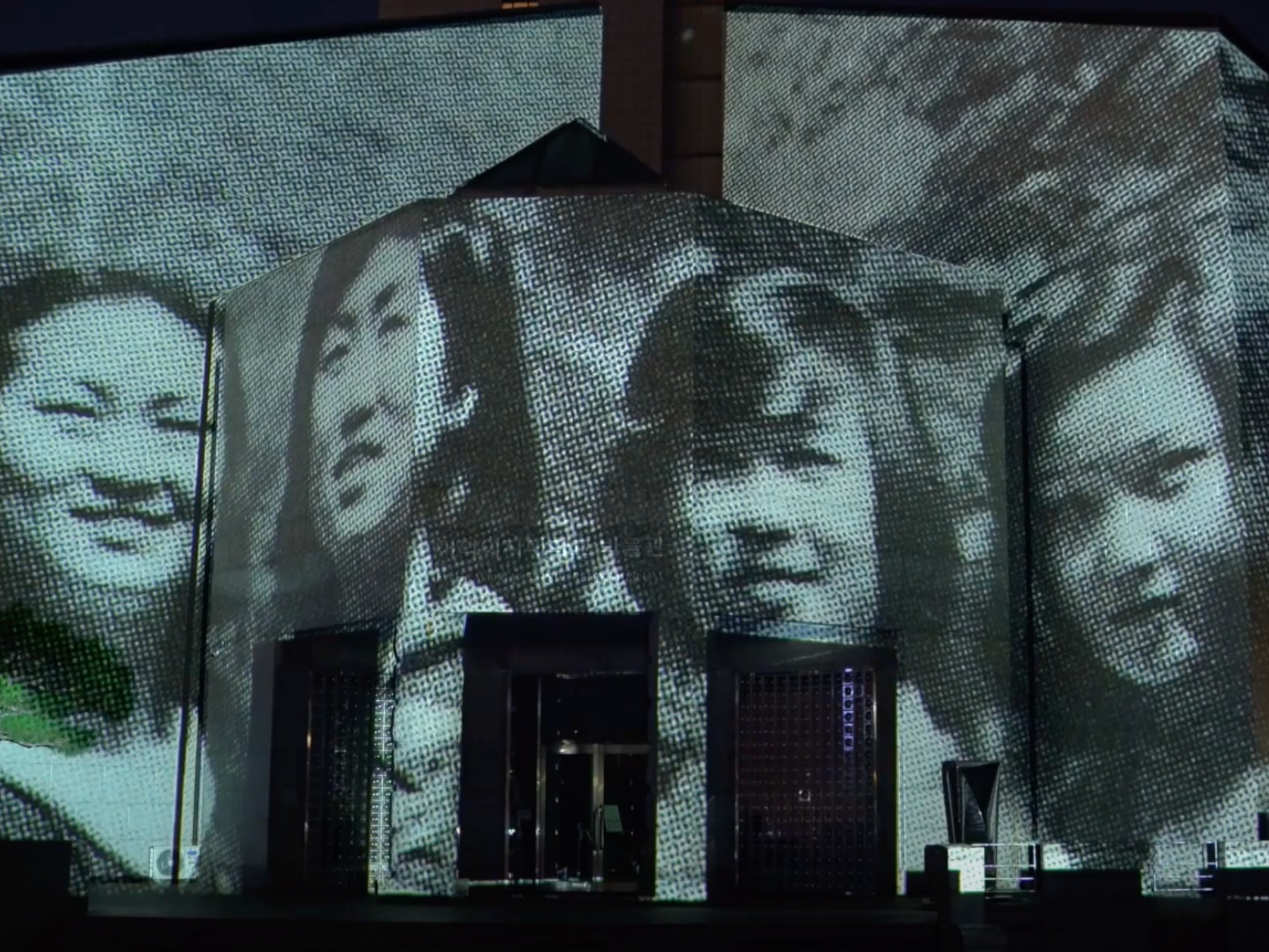

Data Mirror is an immersive, interactive spatial-audio and visual installation that transforms collective presence into evolving sound and image, showing how shared spaces and the people within them continuously reshape one another. Participants are placed inside a reactive, matrix-like environment driven by movement, proximity, and sonic interaction. A multistem musical composition plays through a spatial audio array, while visuals projected across the front wall reveal the system's internal "data world": a 3D space containing matrix-inspired effects, a stylized humanoid avatar, and a virtual representation of the speaker array.

AI-generated perspective of project concept

Project Schematic, signal flow including top view

Participants can manipulate sound using a Nulea M512 Wireless Trackball Mouse, which controls real-time spatialization within Unity. They can cycle through several stem combinations (drums, bass, harmony, melody) and control how those stems are spatially mirrored through different panning patterns. Stem loops come from eight pre-built musical sets created in Ableton, which progress throughout the installation.

The spatial distribution of participants adjusts the BPM of the loop. For example, the BPM increases as the spread of participants' planar (x, y) positions decreases, almost as if energy rises when atoms become concentrated.

An Intel RealSense depth camera analyzes crowd movement and estimates a real-time center of mass. This position data modulates the global tempo. As users group together or spread apart, the music subtly shifts, reinforcing the idea that the audience, the environment, and the composition form a shared, responsive ecosystem.
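As a rough illustration of the spread-to-tempo idea described above, the following Python sketch measures how dispersed a set of planar positions is and maps that value to a tempo. The function names, the use of RMS distance from the centroid as the spread measure, the `max_spread` normalizer, and the 90 to 130 BPM range are all assumptions made for illustration, not values taken from the installation.

```python
import math

def crowd_spread(positions):
    """RMS distance of (x, y) positions from their centroid (hypothetical spread measure)."""
    if not positions:
        return 0.0
    n = len(positions)
    cx = sum(x for x, _ in positions) / n
    cy = sum(y for _, y in positions) / n
    return math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in positions) / n)

def spread_to_bpm(spread, max_spread=2.0, bpm_min=90.0, bpm_max=130.0):
    """Map spread to tempo: a tight crowd gives bpm_max, a dispersed crowd gives bpm_min.

    max_spread, bpm_min, and bpm_max are assumed tuning values.
    """
    t = min(max(spread / max_spread, 0.0), 1.0)  # clamp the normalized spread to [0, 1]
    return bpm_max - t * (bpm_max - bpm_min)     # linear interpolation, inverted

# Two people one unit either side of the centroid: spread 1.0, halfway through the range.
bpm = spread_to_bpm(crowd_spread([(-1.0, 0.0), (1.0, 0.0)]))
```

A linear, clamped mapping keeps tempo changes subtle and bounded; in practice the result would likely also be smoothed over time so that the tempo does not jump from frame to frame.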

Python Script Explanation

The computer vision system tracks people in real-time video and translates their spatial distribution into sound control parameters. Using OpenCV's background subtraction and a custom tracking algorithm, the system detects and follows individuals across frames and calculates three key metrics: the number of people present, their horizontal center of mass (ranging from far left to far right), and the degree of spatial clustering. These metrics are sent as OSC messages to Max for Live at a configurable rate, so the crowd's physical arrangement directly influences audio parameters. The result is an interactive installation in which human movement and positioning dynamically shape the sonic environment.
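The metric and messaging steps described above can be sketched as follows. This is a minimal illustration, not the project's actual script: it assumes the detection and tracking stage has already produced one pixel-space centroid per person, and the function names, the `/crowd` address, the clustering formula, and the argument layout are hypothetical. The message is encoded by hand following the OSC 1.0 format (null-terminated strings padded to 4 bytes, big-endian 32-bit arguments) so that the example needs only the standard library.

```python
import math
import socket
import struct

def crowd_metrics(centroids, frame_width, frame_height):
    """Return (count, center_x, clustering) from per-person pixel centroids.

    center_x runs from 0.0 (far left) to 1.0 (far right); clustering runs from
    0.0 (maximally dispersed) to 1.0 (everyone at one point). Both scales are assumed.
    """
    n = len(centroids)
    if n == 0:
        return 0, 0.5, 0.0  # assumed neutral values for an empty frame
    xs = [x / frame_width for x, _ in centroids]
    ys = [y / frame_height for _, y in centroids]
    cx, cy = sum(xs) / n, sum(ys) / n
    rms = math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in zip(xs, ys)) / n)
    # sqrt(0.5) is the largest RMS spread possible in a unit square (opposite corners).
    clustering = 1.0 - min(rms / math.sqrt(0.5), 1.0)
    return n, cx, clustering

def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, count, center_x, clustering):
    """Build an OSC message carrying one int32 and two float32 arguments."""
    return (osc_string(address) + osc_string(",iff")
            + struct.pack(">iff", count, center_x, clustering))

def send_metrics(sock, host, port, metrics, address="/crowd"):
    """Send one metrics tuple over UDP; the address pattern is a placeholder."""
    sock.sendto(osc_message(address, *metrics), (host, port))

# Example use (host and port are placeholders for the Max for Live receiver):
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# send_metrics(sock, "127.0.0.1", 9000, crowd_metrics([(160, 240), (480, 240)], 640, 480))
```

Normalizing positions by the frame size keeps the metrics independent of camera resolution, which makes the receiving patch easier to tune.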

Dolby Atmos, spatial panning, object-based audio in Dolby Atmos Renderer

Our motivation for creating Data Mirror grew from several intersecting influences that shaped both the aesthetics and the technical design of the installation. We were inspired by The Matrix, particularly its visual language and its exploration of digital embodiment, perception, and identity. We were also motivated by Dolby Atmos, especially its object-based spatial panning and mirrored panning techniques, which shaped how we approached sound as a movable, expressive entity within space.

Alongside these inspirations, our earlier work with spatial panning via OSC naturally evolved into a unified concept. These combined ideas shaped Data Mirror into an environment that reflects how people interact with digital systems and how their presence continuously transforms sound and image in real time.

Goals and Learning Outcomes

Audience Goals

- Feel empowered and challenged by controlling spatial audio that responds to their actions.

- Understand how collective movement alters the sonic and visual environment, even when one person believes they are in control.

- Experience sound as a malleable, reactive substance rather than fixed playback.

Creator Learning Goals

- Develop a portable and repeatable workflow for multichannel spatial installations.

- Gain a deeper understanding of IEM spatialization, OSC routing, and Unity-to-DAW communication.

- Prototype interaction models that may extend into future VR and MR spatial music research.

Role of Interactivity and Media

Interactivity is central to the meaning of Data Mirror.

- Sound: Stems move based on user input and spatial mapping, creating a strong sense of agency.

- Visuals: Matrix-style shaders, reactive colored orbs, and a humanoid data avatar reflect participant influence.

- Shared presence: Crowd movement shapes global musical parameters, demonstrating how individual and collective behaviors influence the system.